This is a guest blog post by Atlassian Marketplace vendor, QMetry.

Tracking and fixing bugs through automation and manual testing processes is becoming increasingly efficient. However efficient these processes are at getting code shipped, the downside is that they offer no predictability.

The next big thing that will alter the landscape of software testing is Predictive Quality Analytics through Artificial Intelligence (AI) and Machine Learning. As this technology matures, it will not only identify major bottlenecks, bug categories, and improvement areas; it will also suggest analytics-based improvements that substantially improve subsequent test runs and, ultimately, software quality.

What is Predictive Quality Analytics?

Predictive quality analytics is the process of extracting useful insights from test data from various sources by applying statistical algorithms and machine learning to determine patterns and predict future outcomes and trends.

This data-driven practice is used to predict bottlenecks, failures, error categories, and productivity drags across testing projects. It helps in determining the future course of action to improve test outcomes, and finally, software quality. Add machine learning and you have the capability to project data and make proactive decisions.

Predictive quality analytics has statistical algorithms at its core. Common techniques include:

- Regression algorithms

- Time series analysis

- Machine learning
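To make the first two techniques in the list above concrete, here is a minimal sketch in plain Python. It fits a least-squares regression line to failure counts per build and smooths the same series with a moving average. The build numbers, failure counts, and the helper names `fit_line` and `moving_average` are hypothetical and chosen for illustration; this is not how any particular product computes its forecasts.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def moving_average(values, window):
    """Simple moving average: one value per full window of the series."""
    return [mean(values[i - window:i]) for i in range(window, len(values) + 1)]

# Hypothetical data: number of failed tests in builds 1 through 6.
builds = [1, 2, 3, 4, 5, 6]
failures = [4, 6, 8, 10, 12, 14]

# Regression: extrapolate the trend to the next build (build 7).
slope, intercept = fit_line(builds, failures)
forecast = slope * 7 + intercept

# Time series analysis: smooth out build-to-build noise.
smoothed = moving_average(failures, 3)

print(f"trend: {slope:.1f} extra failures per build, forecast for build 7: {forecast:.1f}")
print("3-build moving average:", smoothed)
```

A straight-line fit is the simplest possible model; real test data is rarely this regular, so the forecast should be read as a trend indicator rather than a precise prediction.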

The scope of Predictive Quality Analytics

Digital transformation happens when you use digital technologies to fundamentally impact all aspects of business and society, and it’s table stakes for today’s competitive landscape.

This is driving software teams to deliver better software faster. Software quality plays a very important role here, and there is a consistent demand to shift quality left in application lifecycle management (ALM).

How can you achieve this efficiently, continuously, and predictably? Predictive Quality Analytics is the answer. Agile and DevOps are at the forefront of the digital transformation process, and predictive quality analytics has the potential to bring great change to application lifecycle management.

Here is what leading analysts are saying about Predictive Quality Analytics and Digital Transformation:

- 42% of CEOs have begun a digital transformation process in their organization in 2017. (2017 CEO Survey: CIOs Must Scale Up Digital Business, Apr. 13, 2017, Forrester Research)

- By 2020, DevOps initiatives will cause 50% of enterprises to implement continuous testing using frameworks and open-source tools. (Predicts 2017: Application Development, Gartner, Nov. 28, 2016)

- To keep up with the business demand to deliver applications quickly, efficiently, and of high quality, IT organizations will need to accelerate the shift in focus to advanced analysis. They will need to adopt a fact-driven analytics culture that is pushed down from the CEO and executive team to effectively reduce waste and increase the velocity and quality of the application. (Predicts 2017: Application Development, Gartner, Nov. 28, 2016)

- 17% of decision-makers have an analytics budget of $10 million or more. And 48% of firms plan to increase spending on advanced analytics. (Forrester, Perishable Insights: Stop Wasting Money On Unactionable Analytics. Use Forrester’s Perishable Insights Framework to Prevent Fresh Data From Going Stale, by Mike Gualtieri and Rowan Curran, August 11, 2016)

To deliver better quality faster for digital transformation initiatives, there is a need for a technology that can deliver actionable intelligence in real time. We at QMetry believe that machine learning and Artificial Intelligence (AI) will play the pivotal role in providing effective predictive and prescriptive analytics.

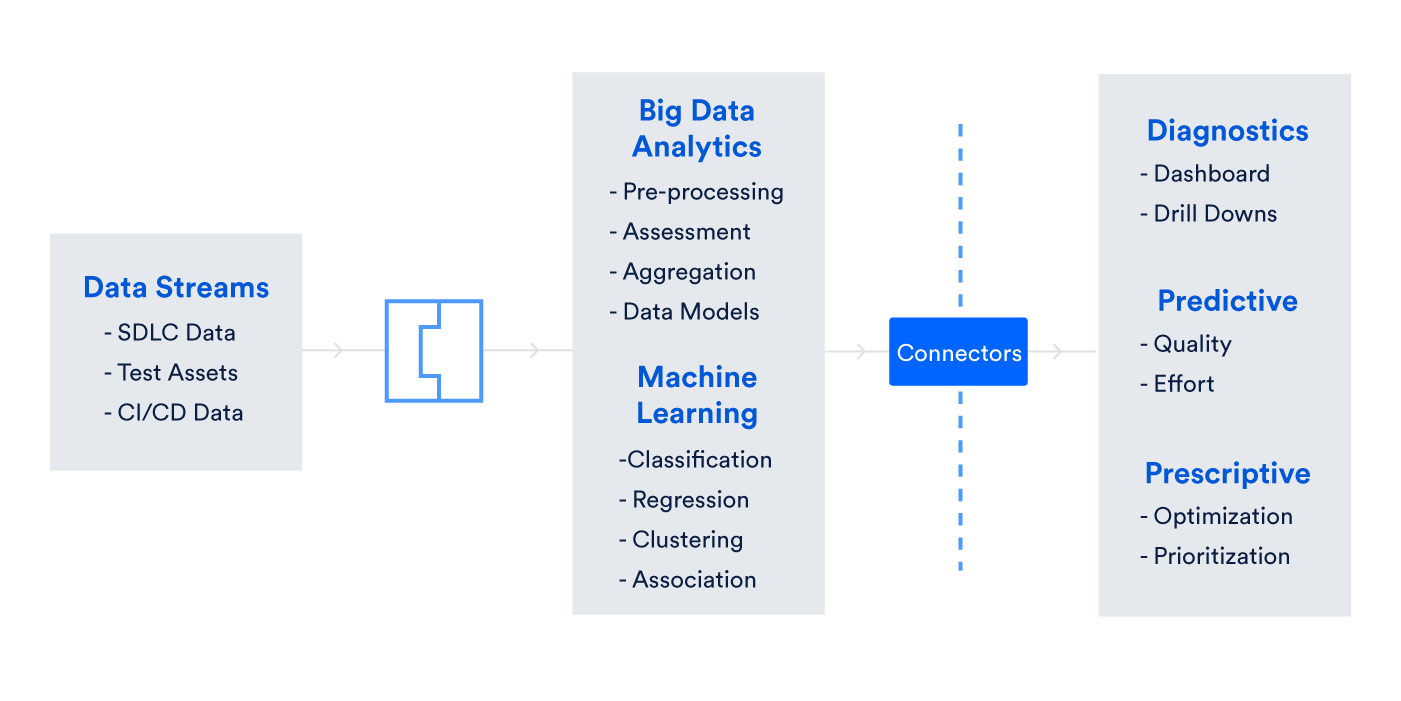

The key challenge in meeting this goal is the incredible amount of unstructured data from various sources. It makes finding key insights and actionable intelligence like finding a needle in a haystack, and the volume is more than people can process manually. Big data analytics is a key first step here: it converts unstructured data into consumable datasets and data models and generates the diagnostics. On its own, though, it is still not enough to generate predictive analytics.

AI, Machine Learning, and Predictive Quality Analytics

Artificial Intelligence and Machine Learning use classification, regression, clustering, and association of datasets to produce meaningful insights and actionable intelligence in real time. The AI engine uses machine learning algorithms to observe and learn continuously in one of three ways: under supervision (guided learning) to achieve set goals, through unsupervised learning, or through reinforcement learning by trial and error. The key outcome from all three is a continuous stream of new learnings and insights.

We already know that the multiple builds and releases of Agile and DevOps require multiple testing cycles, and they churn out big data. Think of a continuous delivery scenario in which thousands of test cases are repeated across multiple test executions. For each execution, a high volume of test data such as test cases, test results, and CI/CD pipeline results is generated. Also, diverse tools such as Jira Software, Bamboo, Jenkins, QMetry Automation Studio, Selenium, HP UFT, and Cucumber are deployed for managing test and CI/CD projects across testing organizations. Each of them emits its own stream of unstructured data. With such complex data sources, analyzing this unstructured data is beyond normal human capabilities.

Big data analytics is the process that assesses, aggregates, and creates data models to develop early insights that help in diagnostics. The focus here is to identify bottlenecks, error categories, productivity index, test coverage, traceability, and other key performance indicators.
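The diagnostic step described above can be sketched on a small scale in plain Python. This example assumes the raw tool output has already been normalized into records; the field names (`test`, `status`, `error`, `seconds`), the sample values, and the `summarize` helper are all hypothetical, and only three of the indicators mentioned above are computed.

```python
from collections import Counter, defaultdict

# Hypothetical, already-structured test results (field names are assumptions).
results = [
    {"test": "login",    "status": "fail", "error": "timeout",   "seconds": 30.0},
    {"test": "login",    "status": "pass", "error": None,        "seconds": 2.0},
    {"test": "checkout", "status": "fail", "error": "assertion", "seconds": 5.0},
    {"test": "checkout", "status": "fail", "error": "timeout",   "seconds": 31.0},
    {"test": "search",   "status": "pass", "error": None,        "seconds": 1.0},
    {"test": "search",   "status": "pass", "error": None,        "seconds": 1.5},
    {"test": "login",    "status": "fail", "error": "timeout",   "seconds": 30.0},
    {"test": "checkout", "status": "pass", "error": None,        "seconds": 4.0},
]

def summarize(records):
    """Aggregate raw results into a few diagnostic indicators."""
    failed = [r for r in records if r["status"] == "fail"]

    # Error categories, most frequent first.
    error_categories = Counter(r["error"] for r in failed).most_common()

    # Total execution time per test, to spot the biggest time sink.
    time_by_test = defaultdict(float)
    for r in records:
        time_by_test[r["test"]] += r["seconds"]

    return {
        "pass_rate": (len(records) - len(failed)) / len(records),
        "error_categories": error_categories,
        "slowest_test": max(time_by_test, key=time_by_test.get),
    }

summary = summarize(results)
print(summary)
```

At production scale the same aggregation would typically run in a data pipeline or warehouse rather than in memory, but the shape of the output (rates, ranked categories, bottlenecks) is the same.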

The real-time actionable intelligence is delivered through AI & Machine Learning. The structured test data is continuously fed to machine learning algorithms that use techniques like data classification, regression, clustering, and association to predict software quality outcomes. The key performance indicators are reduced time to market and improved software quality. This, in turn, leads to customer satisfaction and major cost savings through effort optimization. As the AI engine matures through continuous learning, it delivers real-time insights that can improve test project outcomes on the go.
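As a rough illustration of the classification technique mentioned above, the following sketch uses a k-nearest-neighbours vote over past test runs to guess whether an upcoming run is likely to fail. The two features (files changed, failures in the last five runs), the training data, and the `predict` helper are hypothetical, the features are left unscaled for brevity, and this is a stand-in for whatever model a real AI engine would use.

```python
from collections import Counter
from math import dist

# Hypothetical history: (files_changed, failures_in_last_5_runs) -> outcome.
history = [
    ((1, 0), "pass"),
    ((2, 0), "pass"),
    ((1, 1), "pass"),
    ((8, 3), "fail"),
    ((9, 4), "fail"),
    ((7, 2), "fail"),
]

def predict(features, k=3):
    """Classify a run by majority vote among its k nearest historical runs."""
    nearest = sorted(history, key=lambda item: dist(item[0], features))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# A large change on a recently unstable test versus a small, quiet one.
risky = predict((8, 2))
safe = predict((2, 1))
print("large change, unstable test:", risky)
print("small change, stable test:", safe)
```

Each new labelled run can be appended to `history`, which is the simplest form of the continuous learning described above: the predictions change as more outcomes are observed.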

Predictive quality analytics aided by AI and machine learning can offer quality insights in seconds or minutes, before the usefulness of these insights perishes.

Software testing in today’s digitally transformative world has a very demanding role: delivering continuous quality at high velocity and with increasing predictability. Predictive Quality Analytics will aid the process by providing continuous actionable intelligence that software testing teams can use to improve each test run.

To experience the magic of predictive quality analytics, try QMetry Wisdom for free.